Merge branch 'main' into day-86

commit e2b73f59df

5

.vscode/settings.json

vendored

Normal file


@@ -0,0 +1,5 @@
{
    "githubPullRequests.ignoredPullRequestBranches": [
        "main"
    ]
}

4

2023.md


@@ -156,8 +156,8 @@ Or contact us via Twitter, my handle is [@MichaelCade1](https://twitter.com/Mich

 ### Engineering for Day 2 Ops

-- [] 👷🏻♀️ 84 > [](2023/day84.md)
-- [] 👷🏻♀️ 85 > [](2023/day85.md)
+- [] 👷🏻♀️ 84 > [Writing an API - What is an API?](2023/day84.md)
+- [] 👷🏻♀️ 85 > [Queues, Queue workers and Tasks (Asynchronous architecture)](2023/day85.md)
 - [] 👷🏻♀️ 86 > [Designing for Resilience, Redundancy and Reliability](2023/day86.md)
 - [] 👷🏻♀️ 87 > [](2023/day87.md)
 - [] 👷🏻♀️ 88 > [](2023/day88.md)

44

2023/day2-ops-code/README.md

Normal file


@@ -0,0 +1,44 @@
# Getting started

This repo expects you to have a working Kubernetes cluster already set up and
available with kubectl and helm.

I like using [Civo](https://www.civo.com/) for this as it is easy to set up and run clusters.

The code is available in this folder to build/push your own images if you wish - there are no instructions for this.

## Start the Database
```shell
kubectl apply -f database/mysql.yaml
```


## Deploy day 1 - sync
```shell
kubectl apply -f synchronous/k8s.yaml
```

Check your logs
```shell
kubectl logs deploy/generator

kubectl logs deploy/requestor
```

## Deploy NATS
```shell
helm repo add nats https://nats-io.github.io/k8s/helm/charts/
helm install my-nats nats/nats
```

## Deploy day 2 - async
```shell
kubectl apply -f async/k8s.yaml
```

Check your logs
```shell
kubectl logs deploy/generator

kubectl logs deploy/requestor
```

17

2023/day2-ops-code/async/generator/Dockerfile

Normal file


@@ -0,0 +1,17 @@
# Set the base image to use
FROM golang:1.17-alpine

# Set the working directory inside the container
WORKDIR /app

# Copy the source code into the container
COPY . .

# Build the Go application
RUN go build -o main .

# Expose the port that the application will run on
EXPOSE 8080

# Define the command that will run when the container starts
CMD ["/app/main"]

17

2023/day2-ops-code/async/generator/go.mod

Normal file


@@ -0,0 +1,17 @@
module main

go 1.20

require (
	github.com/go-sql-driver/mysql v1.7.0
	github.com/nats-io/nats.go v1.24.0
)

require (
	github.com/golang/protobuf v1.5.3 // indirect
	github.com/nats-io/nats-server/v2 v2.9.15 // indirect
	github.com/nats-io/nkeys v0.3.0 // indirect
	github.com/nats-io/nuid v1.0.1 // indirect
	golang.org/x/crypto v0.6.0 // indirect
	google.golang.org/protobuf v1.30.0 // indirect
)

32

2023/day2-ops-code/async/generator/go.sum

Normal file


@@ -0,0 +1,32 @@
github.com/go-sql-driver/mysql v1.7.0 h1:ueSltNNllEqE3qcWBTD0iQd3IpL/6U+mJxLkazJ7YPc=
github.com/go-sql-driver/mysql v1.7.0/go.mod h1:OXbVy3sEdcQ2Doequ6Z5BW6fXNQTmx+9S1MCJN5yJMI=
github.com/golang/protobuf v1.5.0/go.mod h1:FsONVRAS9T7sI+LIUmWTfcYkHO4aIWwzhcaSAoJOfIk=
github.com/golang/protobuf v1.5.3 h1:KhyjKVUg7Usr/dYsdSqoFveMYd5ko72D+zANwlG1mmg=
github.com/golang/protobuf v1.5.3/go.mod h1:XVQd3VNwM+JqD3oG2Ue2ip4fOMUkwXdXDdiuN0vRsmY=
github.com/google/go-cmp v0.5.5/go.mod h1:v8dTdLbMG2kIc/vJvl+f65V22dbkXbowE6jgT/gNBxE=
github.com/klauspost/compress v1.16.0 h1:iULayQNOReoYUe+1qtKOqw9CwJv3aNQu8ivo7lw1HU4=
github.com/minio/highwayhash v1.0.2 h1:Aak5U0nElisjDCfPSG79Tgzkn2gl66NxOMspRrKnA/g=
github.com/nats-io/jwt/v2 v2.3.0 h1:z2mA1a7tIf5ShggOFlR1oBPgd6hGqcDYsISxZByUzdI=
github.com/nats-io/nats-server/v2 v2.9.15 h1:MuwEJheIwpvFgqvbs20W8Ish2azcygjf4Z0liVu2I4c=
github.com/nats-io/nats-server/v2 v2.9.15/go.mod h1:QlCTy115fqpx4KSOPFIxSV7DdI6OxtZsGOL1JLdeRlE=
github.com/nats-io/nats.go v1.24.0 h1:CRiD8L5GOQu/DcfkmgBcTTIQORMwizF+rPk6T0RaHVQ=
github.com/nats-io/nats.go v1.24.0/go.mod h1:dVQF+BK3SzUZpwyzHedXsvH3EO38aVKuOPkkHlv5hXA=
github.com/nats-io/nkeys v0.3.0 h1:cgM5tL53EvYRU+2YLXIK0G2mJtK12Ft9oeooSZMA2G8=
github.com/nats-io/nkeys v0.3.0/go.mod h1:gvUNGjVcM2IPr5rCsRsC6Wb3Hr2CQAm08dsxtV6A5y4=
github.com/nats-io/nuid v1.0.1 h1:5iA8DT8V7q8WK2EScv2padNa/rTESc1KdnPw4TC2paw=
github.com/nats-io/nuid v1.0.1/go.mod h1:19wcPz3Ph3q0Jbyiqsd0kePYG7A95tJPxeL+1OSON2c=
golang.org/x/crypto v0.0.0-20210314154223-e6e6c4f2bb5b/go.mod h1:T9bdIzuCu7OtxOm1hfPfRQxPLYneinmdGuTeoZ9dtd4=
golang.org/x/crypto v0.6.0 h1:qfktjS5LUO+fFKeJXZ+ikTRijMmljikvG68fpMMruSc=
golang.org/x/crypto v0.6.0/go.mod h1:OFC/31mSvZgRz0V1QTNCzfAI1aIRzbiufJtkMIlEp58=
golang.org/x/net v0.0.0-20210226172049-e18ecbb05110/go.mod h1:m0MpNAwzfU5UDzcl9v0D8zg8gWTRqZa9RBIspLL5mdg=
golang.org/x/sys v0.0.0-20201119102817-f84b799fce68/go.mod h1:h1NjWce9XRLGQEsW7wpKNCjG9DtNlClVuFLEZdDNbEs=
golang.org/x/sys v0.5.0 h1:MUK/U/4lj1t1oPg0HfuXDN/Z1wv31ZJ/YcPiGccS4DU=
golang.org/x/term v0.0.0-20201126162022-7de9c90e9dd1/go.mod h1:bj7SfCRtBDWHUb9snDiAeCFNEtKQo2Wmx5Cou7ajbmo=
golang.org/x/text v0.3.3/go.mod h1:5Zoc/QRtKVWzQhOtBMvqHzDpF6irO9z98xDceosuGiQ=
golang.org/x/time v0.3.0 h1:rg5rLMjNzMS1RkNLzCG38eapWhnYLFYXDXj2gOlr8j4=
golang.org/x/tools v0.0.0-20180917221912-90fa682c2a6e/go.mod h1:n7NCudcB/nEzxVGmLbDWY5pfWTLqBcC2KZ6jyYvM4mQ=
golang.org/x/xerrors v0.0.0-20191204190536-9bdfabe68543/go.mod h1:I/5z698sn9Ka8TeJc9MKroUUfqBBauWjQqLJ2OPfmY0=
google.golang.org/protobuf v1.26.0-rc.1/go.mod h1:jlhhOSvTdKEhbULTjvd4ARK9grFBp09yW+WbY/TyQbw=
google.golang.org/protobuf v1.26.0/go.mod h1:9q0QmTI4eRPtz6boOQmLYwt+qCgq0jsYwAQnmE0givc=
google.golang.org/protobuf v1.30.0 h1:kPPoIgf3TsEvrm0PFe15JQ+570QVxYzEvvHqChK+cng=
google.golang.org/protobuf v1.30.0/go.mod h1:HV8QOd/L58Z+nl8r43ehVNZIU/HEI6OcFqwMG9pJV4I=

100

2023/day2-ops-code/async/generator/main.go

Normal file


@@ -0,0 +1,100 @@
package main

import (
	"database/sql"
	"fmt"
	_ "github.com/go-sql-driver/mysql"
	nats "github.com/nats-io/nats.go"
	"math/rand"
	"time"
)

func generateAndStoreString() (string, error) {
	// Connect to the database
	db, err := sql.Open("mysql", "root:password@tcp(mysql:3306)/mysql")
	if err != nil {
		return "", err
	}
	defer db.Close()

	// Generate a random string
	// Define a string of characters to use
	characters := "abcdefghijklmnopqrstuvwxyzABCDEFGHIJKLMNOPQRSTUVWXYZ0123456789"

	// Generate a random string of length 64
	randomString := make([]byte, 64)
	for i := range randomString {
		randomString[i] = characters[rand.Intn(len(characters))]
	}

	// Insert the random string into the database
	_, err = db.Exec("INSERT INTO generator_async(random_string) VALUES(?)", string(randomString))
	if err != nil {
		return "", err
	}

	fmt.Printf("Random string %s has been inserted into the database\n", string(randomString))
	return string(randomString), nil
}

func main() {
	err := createGeneratordb()
	if err != nil {
		panic(err.Error())
	}

	// Fail fast if NATS is unreachable instead of continuing with a nil connection
	nc, err := nats.Connect("nats://my-nats:4222")
	if err != nil {
		panic(err.Error())
	}
	defer nc.Close()

	nc.Subscribe("generator", func(msg *nats.Msg) {
		s, err := generateAndStoreString()
		if err != nil {
			// Do not publish an empty string when generation or storage failed
			fmt.Println(err)
			return
		}
		nc.Publish("generator_reply", []byte(s))
		nc.Publish("confirmation", []byte(s))
	})

	nc.Subscribe("confirmation_reply", func(msg *nats.Msg) {
		stringReceived(string(msg.Data))
	})
	// Subscribe to the queue
	// when a message comes in call generateAndStoreString() then put the string on the
	// reply queue. also add a message onto the confirmation queue

	// subscribe to the confirmation reply queue
	// when a message comes in call

	for {
		time.Sleep(1 * time.Second)
	}

}

func createGeneratordb() error {
	db, err := sql.Open("mysql", "root:password@tcp(mysql:3306)/mysql")
	if err != nil {
		return err
	}
	defer db.Close()

	// try to create a table for us
	_, err = db.Exec("CREATE TABLE IF NOT EXISTS generator_async(random_string VARCHAR(100), seen BOOLEAN, requested BOOLEAN)")

	return err
}

func stringReceived(input string) {

	// Connect to the database
	db, err := sql.Open("mysql", "root:password@tcp(mysql:3306)/mysql")
	if err != nil {
		// db is nil here, so stop before it is used
		fmt.Println(err)
		return
	}
	defer db.Close()

	_, err = db.Exec("UPDATE generator_async SET requested = true WHERE random_string = ?", input)
	if err != nil {
		fmt.Println(err)
	}
}
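The generator above draws its characters from `math/rand` without seeding it. Before Go 1.20 the default source started from a fixed seed, and the Dockerfile in this commit builds with `golang:1.17-alpine`, so a restarted pod may replay the same sequence of strings. A minimal sketch of one alternative, assuming `crypto/rand` is acceptable for this demo; `randomString` and the package-level `characters` constant are hypothetical names, not part of the commit:

```go
package main

import (
	"crypto/rand"
	"fmt"
	"math/big"
)

// characters mirrors the alphabet used by generateAndStoreString.
const characters = "abcdefghijklmnopqrstuvwxyzABCDEFGHIJKLMNOPQRSTUVWXYZ0123456789"

// randomString (hypothetical helper) returns n characters drawn uniformly
// from characters using the operating system's random source.
func randomString(n int) (string, error) {
	out := make([]byte, n)
	max := big.NewInt(int64(len(characters)))
	for i := range out {
		// rand.Int returns a uniform value in [0, max).
		idx, err := rand.Int(rand.Reader, max)
		if err != nil {
			return "", err
		}
		out[i] = characters[idx.Int64()]
	}
	return string(out), nil
}

func main() {
	s, err := randomString(64)
	if err != nil {
		panic(err)
	}
	fmt.Println(len(s), s)
}
```

Keeping generation separate from the database insert also makes it possible to check the string on its own, without a MySQL instance.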

69

2023/day2-ops-code/async/k8s.yaml

Normal file


@@ -0,0 +1,69 @@
apiVersion: apps/v1
kind: Deployment
metadata:
  name: requestor
spec:
  replicas: 1
  selector:
    matchLabels:
      app: requestor
  template:
    metadata:
      labels:
        app: requestor
    spec:
      containers:
      - name: requestor
        image: heyal/requestor:async
        imagePullPolicy: Always
        ports:
        - containerPort: 8080
---
apiVersion: v1
kind: Service
metadata:
  name: requestor-service
spec:
  selector:
    app: requestor
  ports:
  - name: http
    protocol: TCP
    port: 8080
    targetPort: 8080
  type: ClusterIP
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: generator
spec:
  replicas: 1
  selector:
    matchLabels:
      app: generator
  template:
    metadata:
      labels:
        app: generator
    spec:
      containers:
      - name: generator
        image: heyal/generator:async
        imagePullPolicy: Always
        ports:
        - containerPort: 8080
---
apiVersion: v1
kind: Service
metadata:
  name: generator-service
spec:
  selector:
    app: generator
  ports:
  - name: http
    protocol: TCP
    port: 8080
    targetPort: 8080
  type: ClusterIP
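Both async services keep `main` alive with an endless `time.Sleep` loop. When Kubernetes stops a pod it sends SIGTERM first, and a Go process that does not handle the signal exits without running deferred calls such as `nc.Close()`. The sketch below shows one possible shape for a signal-aware loop, using only the standard library; `tickUntilDone` and `runDemo` are hypothetical names, and the timeout exists only so the demo exits on its own:

```go
package main

import (
	"context"
	"fmt"
	"os"
	"os/signal"
	"syscall"
	"time"
)

// tickUntilDone calls fn on every tick until ctx is cancelled and
// returns how many times fn ran.
func tickUntilDone(ctx context.Context, interval time.Duration, fn func()) int {
	ticker := time.NewTicker(interval)
	defer ticker.Stop()
	n := 0
	for {
		select {
		case <-ticker.C:
			fn()
			n++
		case <-ctx.Done():
			return n
		}
	}
}

// runDemo (hypothetical) runs the loop for totalMillis milliseconds with a
// tick every intervalMillis milliseconds and returns the tick count.
func runDemo(totalMillis, intervalMillis int) int {
	// In a real service this context alone would drive shutdown:
	// it is cancelled when the process receives SIGINT or SIGTERM.
	ctx, stop := signal.NotifyContext(context.Background(), os.Interrupt, syscall.SIGTERM)
	defer stop()

	// Assumption for the demo only: stop after a fixed time.
	ctx, cancel := context.WithTimeout(ctx, time.Duration(totalMillis)*time.Millisecond)
	defer cancel()

	return tickUntilDone(ctx, time.Duration(intervalMillis)*time.Millisecond, func() {
		// In the requestor this is where the "generator" message would be published.
	})
}

func main() {
	fmt.Println("ticks before shutdown:", runDemo(250, 50))
}
```

Because the loop returns when the context is cancelled, `main` can return normally and deferred cleanup gets a chance to run.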

17

2023/day2-ops-code/async/requestor/Dockerfile

Normal file


@@ -0,0 +1,17 @@
# Set the base image to use
FROM golang:1.17-alpine

# Set the working directory inside the container
WORKDIR /app

# Copy the source code into the container
COPY . .

# Build the Go application
RUN go build -o main .

# Expose the port that the application will run on
EXPOSE 8080

# Define the command that will run when the container starts
CMD ["/app/main"]

17

2023/day2-ops-code/async/requestor/go.mod

Normal file


@@ -0,0 +1,17 @@
module main

go 1.20

require (
	github.com/go-sql-driver/mysql v1.7.0
	github.com/nats-io/nats.go v1.24.0
)

require (
	github.com/golang/protobuf v1.5.3 // indirect
	github.com/nats-io/nats-server/v2 v2.9.15 // indirect
	github.com/nats-io/nkeys v0.3.0 // indirect
	github.com/nats-io/nuid v1.0.1 // indirect
	golang.org/x/crypto v0.6.0 // indirect
	google.golang.org/protobuf v1.30.0 // indirect
)

32

2023/day2-ops-code/async/requestor/go.sum

Normal file


@@ -0,0 +1,32 @@
github.com/go-sql-driver/mysql v1.7.0 h1:ueSltNNllEqE3qcWBTD0iQd3IpL/6U+mJxLkazJ7YPc=
github.com/go-sql-driver/mysql v1.7.0/go.mod h1:OXbVy3sEdcQ2Doequ6Z5BW6fXNQTmx+9S1MCJN5yJMI=
github.com/golang/protobuf v1.5.0/go.mod h1:FsONVRAS9T7sI+LIUmWTfcYkHO4aIWwzhcaSAoJOfIk=
github.com/golang/protobuf v1.5.3 h1:KhyjKVUg7Usr/dYsdSqoFveMYd5ko72D+zANwlG1mmg=
github.com/golang/protobuf v1.5.3/go.mod h1:XVQd3VNwM+JqD3oG2Ue2ip4fOMUkwXdXDdiuN0vRsmY=
github.com/google/go-cmp v0.5.5/go.mod h1:v8dTdLbMG2kIc/vJvl+f65V22dbkXbowE6jgT/gNBxE=
github.com/klauspost/compress v1.16.0 h1:iULayQNOReoYUe+1qtKOqw9CwJv3aNQu8ivo7lw1HU4=
github.com/minio/highwayhash v1.0.2 h1:Aak5U0nElisjDCfPSG79Tgzkn2gl66NxOMspRrKnA/g=
github.com/nats-io/jwt/v2 v2.3.0 h1:z2mA1a7tIf5ShggOFlR1oBPgd6hGqcDYsISxZByUzdI=
github.com/nats-io/nats-server/v2 v2.9.15 h1:MuwEJheIwpvFgqvbs20W8Ish2azcygjf4Z0liVu2I4c=
github.com/nats-io/nats-server/v2 v2.9.15/go.mod h1:QlCTy115fqpx4KSOPFIxSV7DdI6OxtZsGOL1JLdeRlE=
github.com/nats-io/nats.go v1.24.0 h1:CRiD8L5GOQu/DcfkmgBcTTIQORMwizF+rPk6T0RaHVQ=
github.com/nats-io/nats.go v1.24.0/go.mod h1:dVQF+BK3SzUZpwyzHedXsvH3EO38aVKuOPkkHlv5hXA=
github.com/nats-io/nkeys v0.3.0 h1:cgM5tL53EvYRU+2YLXIK0G2mJtK12Ft9oeooSZMA2G8=
github.com/nats-io/nkeys v0.3.0/go.mod h1:gvUNGjVcM2IPr5rCsRsC6Wb3Hr2CQAm08dsxtV6A5y4=
github.com/nats-io/nuid v1.0.1 h1:5iA8DT8V7q8WK2EScv2padNa/rTESc1KdnPw4TC2paw=
github.com/nats-io/nuid v1.0.1/go.mod h1:19wcPz3Ph3q0Jbyiqsd0kePYG7A95tJPxeL+1OSON2c=
golang.org/x/crypto v0.0.0-20210314154223-e6e6c4f2bb5b/go.mod h1:T9bdIzuCu7OtxOm1hfPfRQxPLYneinmdGuTeoZ9dtd4=
golang.org/x/crypto v0.6.0 h1:qfktjS5LUO+fFKeJXZ+ikTRijMmljikvG68fpMMruSc=
golang.org/x/crypto v0.6.0/go.mod h1:OFC/31mSvZgRz0V1QTNCzfAI1aIRzbiufJtkMIlEp58=
golang.org/x/net v0.0.0-20210226172049-e18ecbb05110/go.mod h1:m0MpNAwzfU5UDzcl9v0D8zg8gWTRqZa9RBIspLL5mdg=
golang.org/x/sys v0.0.0-20201119102817-f84b799fce68/go.mod h1:h1NjWce9XRLGQEsW7wpKNCjG9DtNlClVuFLEZdDNbEs=
golang.org/x/sys v0.5.0 h1:MUK/U/4lj1t1oPg0HfuXDN/Z1wv31ZJ/YcPiGccS4DU=
golang.org/x/term v0.0.0-20201126162022-7de9c90e9dd1/go.mod h1:bj7SfCRtBDWHUb9snDiAeCFNEtKQo2Wmx5Cou7ajbmo=
golang.org/x/text v0.3.3/go.mod h1:5Zoc/QRtKVWzQhOtBMvqHzDpF6irO9z98xDceosuGiQ=
golang.org/x/time v0.3.0 h1:rg5rLMjNzMS1RkNLzCG38eapWhnYLFYXDXj2gOlr8j4=
golang.org/x/tools v0.0.0-20180917221912-90fa682c2a6e/go.mod h1:n7NCudcB/nEzxVGmLbDWY5pfWTLqBcC2KZ6jyYvM4mQ=
golang.org/x/xerrors v0.0.0-20191204190536-9bdfabe68543/go.mod h1:I/5z698sn9Ka8TeJc9MKroUUfqBBauWjQqLJ2OPfmY0=
google.golang.org/protobuf v1.26.0-rc.1/go.mod h1:jlhhOSvTdKEhbULTjvd4ARK9grFBp09yW+WbY/TyQbw=
google.golang.org/protobuf v1.26.0/go.mod h1:9q0QmTI4eRPtz6boOQmLYwt+qCgq0jsYwAQnmE0givc=
google.golang.org/protobuf v1.30.0 h1:kPPoIgf3TsEvrm0PFe15JQ+570QVxYzEvvHqChK+cng=
google.golang.org/protobuf v1.30.0/go.mod h1:HV8QOd/L58Z+nl8r43ehVNZIU/HEI6OcFqwMG9pJV4I=

108

2023/day2-ops-code/async/requestor/main.go

Normal file


@@ -0,0 +1,108 @@
package main

import (
	"database/sql"
	"errors"
	"fmt"
	_ "github.com/go-sql-driver/mysql"
	nats "github.com/nats-io/nats.go"
	"time"
)

func storeString(input string) error {
	// Connect to the database
	db, err := sql.Open("mysql", "root:password@tcp(mysql:3306)/mysql")
	if err != nil {
		return err
	}
	defer db.Close()
	// Insert the random string into the database
	_, err = db.Exec("INSERT INTO requestor_async(random_string) VALUES(?)", input)
	if err != nil {
		return err
	}

	fmt.Printf("Random string %s has been inserted into the database\n", input)
	return nil
}

func getStringFromDB(input string) error {
	// see if the string exists in the db, if so return nil
	// if not, return an error
	db, err := sql.Open("mysql", "root:password@tcp(mysql:3306)/mysql")
	if err != nil {
		return err
	}
	defer db.Close()
	result, err := db.Query("SELECT * FROM requestor_async WHERE random_string = ?", input)
	if err != nil {
		return err
	}
	// Release the underlying connection once the rows have been read
	defer result.Close()

	for result.Next() {
		var randomString string
		err = result.Scan(&randomString)
		if err != nil {
			return err
		}
		if randomString == input {
			return nil
		}
	}

	return errors.New("string not found")
}

func main() {

	err := createRequestordb()
	if err != nil {
		panic(err.Error())
	}
	// setup a goroutine loop calling the generator every minute, saving the result in the DB

	// Fail fast if NATS is unreachable instead of continuing with a nil connection
	nc, err := nats.Connect("nats://my-nats:4222")
	if err != nil {
		panic(err.Error())
	}
	defer nc.Close()

	ticker := time.NewTicker(60 * time.Second)
	quit := make(chan struct{})
	go func() {
		for {
			select {
			case <-ticker.C:
				nc.Publish("generator", []byte(""))
			case <-quit:
				ticker.Stop()
				return
			}
		}
	}()

	nc.Subscribe("generator_reply", func(msg *nats.Msg) {
		err := storeString(string(msg.Data))
		if err != nil {
			fmt.Println(err)
		}
	})

	nc.Subscribe("confirmation", func(msg *nats.Msg) {
		err := getStringFromDB(string(msg.Data))
		if err != nil {
			fmt.Println(err)
		}
		nc.Publish("confirmation_reply", msg.Data)
	})
	// create a goroutine here to listen for messages on the queue to check, see if we have them

	for {
		time.Sleep(10 * time.Second)
	}

}

func createRequestordb() error {
	db, err := sql.Open("mysql", "root:password@tcp(mysql:3306)/mysql")
	if err != nil {
		return err
	}
	defer db.Close()

	// try to create a table for us
	_, err = db.Exec("CREATE TABLE IF NOT EXISTS requestor_async(random_string VARCHAR(100))")

	return err
}
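Both services call their `create...db` function once at startup and panic if it fails, so a pod that starts before MySQL accepts connections crashes and relies on Kubernetes to restart it. One hedged alternative is to retry the startup step a few times with a growing delay. The sketch below uses only the standard library; `retry` and `flakyAfter` are hypothetical helpers, and `flakyAfter` merely stands in for a call such as `createRequestordb`:

```go
package main

import (
	"errors"
	"fmt"
	"time"
)

// retry calls fn up to attempts times, doubling the delay after each
// failure, and returns nil as soon as fn succeeds.
func retry(attempts int, delay time.Duration, fn func() error) error {
	if attempts < 1 {
		attempts = 1
	}
	var err error
	for i := 0; i < attempts; i++ {
		if err = fn(); err == nil {
			return nil
		}
		// Sleep only when another attempt will follow.
		if i < attempts-1 {
			time.Sleep(delay)
			delay *= 2
		}
	}
	return fmt.Errorf("giving up after %d attempts: %w", attempts, err)
}

// flakyAfter returns a function that fails the first `failures` calls and
// succeeds afterwards. It is a stand-in for a database that is still starting.
func flakyAfter(failures int) func() error {
	calls := 0
	return func() error {
		calls++
		if calls <= failures {
			return errors.New("database not ready")
		}
		return nil
	}
}

func main() {
	err := retry(5, 10*time.Millisecond, flakyAfter(2))
	fmt.Println("startup error:", err)
}
```

In the services themselves the call would look like `retry(5, time.Second, createRequestordb)`, with the attempt count and delay chosen to suit the cluster.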

2

2023/day2-ops-code/buildpush.sh

Executable file


@@ -0,0 +1,2 @@
docker build ./async/requestor/ -f async/requestor/Dockerfile -t heyal/requestor:async && docker push heyal/requestor:async
docker build ./async/generator/ -f async/generator/Dockerfile -t heyal/generator:async && docker push heyal/generator:async

77

2023/day2-ops-code/database/mysql.yaml

Normal file


@@ -0,0 +1,77 @@
apiVersion: v1
kind: Service
metadata:
  name: mysql
spec:
  ports:
  - port: 3306
  selector:
    app: mysql
  clusterIP: None
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: mysql
spec:
  selector:
    matchLabels:
      app: mysql
  strategy:
    type: Recreate
  template:
    metadata:
      labels:
        app: mysql
    spec:
      containers:
      - image: mysql:5.6
        name: mysql
        env:
          # Use secret in real usage
        - name: MYSQL_ROOT_PASSWORD
          value: password
        - name: MYSQL_DATABASE
          value: example
        - name: MYSQL_USER
          value: example
        - name: MYSQL_PASSWORD
          value: password
        ports:
        - containerPort: 3306
          name: mysql
        volumeMounts:
        - name: mysql-persistent-storage
          mountPath: /var/lib/mysql
      volumes:
      - name: mysql-persistent-storage
        persistentVolumeClaim:
          claimName: mysql-pv-claim
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: mysql-pv-volume
  labels:
    type: local
spec:
  storageClassName: manual
  capacity:
    storage: 20Gi
  accessModes:
    - ReadWriteOnce
  hostPath:
    path: "/mnt/data"
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: mysql-pv-claim
spec:
  storageClassName: manual
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 10Gi
# https://kubernetes.io/docs/tasks/run-application/run-single-instance-stateful-application/
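The manifest notes that a Secret should be used in real usage, while the Go services hard-code `root:password@tcp(mysql:3306)/mysql`. A minimal sketch of building the DSN from the environment instead, so that credentials could be injected into the pod (for example from a Secret exposed as environment variables). The variable names `DB_USER`, `DB_PASSWORD`, `DB_HOST`, `DB_PORT` and `DB_NAME` and the helper `dsnFrom` are assumptions for illustration, not something this commit defines:

```go
package main

import (
	"fmt"
	"os"
)

// dsnFrom (hypothetical helper) builds a go-sql-driver/mysql style DSN.
// lookup returns the value for a key, or "" when it is not set; the
// defaults reproduce the DSN that is hard-coded in the services.
func dsnFrom(lookup func(string) string) string {
	get := func(key, fallback string) string {
		if v := lookup(key); v != "" {
			return v
		}
		return fallback
	}
	user := get("DB_USER", "root")
	pass := get("DB_PASSWORD", "password")
	host := get("DB_HOST", "mysql")
	port := get("DB_PORT", "3306")
	name := get("DB_NAME", "mysql")
	return fmt.Sprintf("%s:%s@tcp(%s:%s)/%s", user, pass, host, port, name)
}

func main() {
	// os.Getenv returns "" for unset variables, which selects the defaults.
	fmt.Println(dsnFrom(os.Getenv))
}
```

Passing the lookup function in, rather than calling `os.Getenv` directly, keeps the helper easy to exercise with a plain map.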

17

2023/day2-ops-code/synchronous/generator/Dockerfile

Normal file


@@ -0,0 +1,17 @@
# Set the base image to use
FROM golang:1.17-alpine

# Set the working directory inside the container
WORKDIR /app

# Copy the source code into the container
COPY . .

# Build the Go application
RUN go build -o main .

# Expose the port that the application will run on
EXPOSE 8080

# Define the command that will run when the container starts
CMD ["/app/main"]

5

2023/day2-ops-code/synchronous/generator/go.mod

Normal file


@@ -0,0 +1,5 @@
module main

go 1.20

require github.com/go-sql-driver/mysql v1.7.0

2

2023/day2-ops-code/synchronous/generator/go.sum

Normal file


@@ -0,0 +1,2 @@
github.com/go-sql-driver/mysql v1.7.0 h1:ueSltNNllEqE3qcWBTD0iQd3IpL/6U+mJxLkazJ7YPc=
github.com/go-sql-driver/mysql v1.7.0/go.mod h1:OXbVy3sEdcQ2Doequ6Z5BW6fXNQTmx+9S1MCJN5yJMI=

139

2023/day2-ops-code/synchronous/generator/main.go

Normal file


@ -0,0 +1,139 @@

|

|||||||

|

package main

|

||||||

|

|

||||||

|

import (

|

||||||

|

"database/sql"

|

||||||

|

"fmt"

|

||||||

|

_ "github.com/go-sql-driver/mysql"

|

||||||

|

"math/rand"

|

||||||

|

"net/http"

|

||||||

|

"time"

|

||||||

|

)

|

||||||

|

|

||||||

|

func generateAndStoreString() (string, error) {

|

||||||

|

// Connect to the database

|

||||||

|

db, err := sql.Open("mysql", "root:password@tcp(mysql:3306)/mysql")

|

||||||

|

if err != nil {

|

||||||

|

return "", err

|

||||||

|

}

|

||||||

|

defer db.Close()

|

||||||

|

|

||||||

|

// Generate a random string

|

||||||

|

// Define a string of characters to use

|

||||||

|

characters := "abcdefghijklmnopqrstuvwxyzABCDEFGHIJKLMNOPQRSTUVWXYZ0123456789"

|

||||||

|

|

||||||

|

// Generate a random string of length 10

|

||||||

|

randomString := make([]byte, 64)

|

||||||

|

for i := range randomString {

|

||||||

|

randomString[i] = characters[rand.Intn(len(characters))]

|

||||||

|

}

|

||||||

|

|

||||||

|

// Insert the random number into the database

|

||||||

|

_, err = db.Exec("INSERT INTO generator(random_string) VALUES(?)", string(randomString))

|

||||||

|

if err != nil {

|

||||||

|

return "", err

|

||||||

|

}

|

||||||

|

|

||||||

|

fmt.Printf("Random string %s has been inserted into the database\n", string(randomString))

|

||||||

|

return string(randomString), nil

|

||||||

|

}

|

||||||

|

|

||||||

|

func main() {

|

||||||

|

// Create a new HTTP server

|

||||||

|

server := &http.Server{

|

||||||

|

Addr: ":8080",

|

||||||

|

}

|

||||||

|

|

||||||

|

err := createGeneratordb()

|

||||||

|

if err != nil {

|

||||||

|

panic(err.Error())

|

||||||

|

}

|

||||||

|

|

||||||

|

ticker := time.NewTicker(60 * time.Second)

|

||||||

|

quit := make(chan struct{})

|

||||||

|

go func() {

|

||||||

|

for {

|

||||||

|

select {

|

||||||

|

case <-ticker.C:

|

||||||

|

checkStringReceived()

|

||||||

|

case <-quit:

|

||||||

|

ticker.Stop()

|

||||||

|

return

|

||||||

|

}

|

||||||

|

}

|

||||||

|

}()

|

||||||

|

|

||||||

|

// Handle requests to /generate

|

||||||

|

http.HandleFunc("/new", func(w http.ResponseWriter, r *http.Request) {

|

||||||

|

// Generate a random number

|

||||||

|

randomString, err := generateAndStoreString()

|

||||||

|

if err != nil {

|

||||||

|

http.Error(w, "unable to generate and save random string", http.StatusInternalServerError)

|

||||||

|

return

|

||||||

|

}

|

||||||

|

|

||||||

|

print(fmt.Sprintf("random string: %s", randomString))

|

||||||

|

w.Write([]byte(randomString))

|

||||||

|

})

|

||||||

|

|

||||||

|

// Start the server

|

||||||

|

fmt.Println("Listening on port 8080")

|

||||||

|

err = server.ListenAndServe()

|

||||||

|

if err != nil {

|

||||||

|

panic(err.Error())

|

||||||

|

}

|

||||||

|

}

|

||||||

|

|

||||||

|

func createGeneratordb() error {

|

||||||

|

db, err := sql.Open("mysql", "root:password@tcp(mysql:3306)/mysql")

|

||||||

|

if err != nil {

|

||||||

|

return err

|

||||||

|

}

|

||||||

|

defer db.Close()

|

||||||

|

|

||||||

|

// try to create a table for us

|

||||||

|

_, err = db.Exec("CREATE TABLE IF NOT EXISTS generator(random_string VARCHAR(100), seen BOOLEAN)")

|

||||||

|

|

||||||

|

return err

|

||||||

|

}

|

||||||

|

|

||||||

|

func checkStringReceived() {

|

||||||

|

// get a list of strings from database that dont have the "seen" bool set top true

|

||||||

|

// loop over them and make a call to the requestor's 'check' endpoint and if we get a 200 then set seen to true

|

||||||

|

|

||||||

|

// Connect to the database

|

||||||

|

db, err := sql.Open("mysql", "root:password@tcp(mysql:3306)/mysql")

|

||||||

|

if err != nil {

|

||||||

|

print(err)

|

||||||

|

}

|

||||||

|

defer db.Close()

|

||||||

|

|

||||||

|

// Insert the random number into the database

|

||||||

|

results, err := db.Query("SELECT random_string FROM generator WHERE seen IS NOT true")

|

||||||

|

if err != nil {

|

||||||

|

print(err)

|

||||||

|

}

|

||||||

|

|

||||||

|

// loop over results

|

||||||

|

for results.Next() {

|

||||||

|

var randomString string

|

||||||

|

err = results.Scan(&randomString)

|

||||||

|

if err != nil {

|

||||||

|

print(err)

|

||||||

|

}

|

||||||

|

|

||||||

|

// make a call to the requestor's 'check' endpoint

|

||||||

|

// if we get a 200 then set seen to true

|

||||||

|

r, err := http.Get("http://requestor-service:8080/check/" + randomString)

|

||||||

|

if err != nil {

|

||||||

|

fmt.Println(err)
continue

|

||||||

|

}

|

||||||

|

r.Body.Close()
if r.StatusCode == http.StatusOK {

|

||||||

|

_, err = db.Exec("UPDATE generator SET seen = true WHERE random_string = ?", randomString)

|

||||||

|

if err != nil {

|

||||||

|

print(err)

|

||||||

|

}

|

||||||

|

} else {

|

||||||

|

fmt.Printf("Random string has not been received by the requestor: %s\n", randomString)

|

||||||

|

}

|

||||||

|

}

|

||||||

|

}

|

||||||

69

2023/day2-ops-code/synchronous/k8s.yaml

Normal file

@ -0,0 +1,69 @@

|

|||||||

|

apiVersion: apps/v1

|

||||||

|

kind: Deployment

|

||||||

|

metadata:

|

||||||

|

name: requestor

|

||||||

|

spec:

|

||||||

|

replicas: 1

|

||||||

|

selector:

|

||||||

|

matchLabels:

|

||||||

|

app: requestor

|

||||||

|

template:

|

||||||

|

metadata:

|

||||||

|

labels:

|

||||||

|

app: requestor

|

||||||

|

spec:

|

||||||

|

containers:

|

||||||

|

- name: requestor

|

||||||

|

image: heyal/requestor:sync

|

||||||

|

imagePullPolicy: Always

|

||||||

|

ports:

|

||||||

|

- containerPort: 8080

|

||||||

|

---

|

||||||

|

apiVersion: v1

|

||||||

|

kind: Service

|

||||||

|

metadata:

|

||||||

|

name: requestor-service

|

||||||

|

spec:

|

||||||

|

selector:

|

||||||

|

app: requestor

|

||||||

|

ports:

|

||||||

|

- name: http

|

||||||

|

protocol: TCP

|

||||||

|

port: 8080

|

||||||

|

targetPort: 8080

|

||||||

|

type: ClusterIP

|

||||||

|

---

|

||||||

|

apiVersion: apps/v1

|

||||||

|

kind: Deployment

|

||||||

|

metadata:

|

||||||

|

name: generator

|

||||||

|

spec:

|

||||||

|

replicas: 1

|

||||||

|

selector:

|

||||||

|

matchLabels:

|

||||||

|

app: generator

|

||||||

|

template:

|

||||||

|

metadata:

|

||||||

|

labels:

|

||||||

|

app: generator

|

||||||

|

spec:

|

||||||

|

containers:

|

||||||

|

- name: generator

|

||||||

|

image: heyal/generator:sync

|

||||||

|

imagePullPolicy: Always

|

||||||

|

ports:

|

||||||

|

- containerPort: 8080

|

||||||

|

---

|

||||||

|

apiVersion: v1

|

||||||

|

kind: Service

|

||||||

|

metadata:

|

||||||

|

name: generator-service

|

||||||

|

spec:

|

||||||

|

selector:

|

||||||

|

app: generator

|

||||||

|

ports:

|

||||||

|

- name: http

|

||||||

|

protocol: TCP

|

||||||

|

port: 8080

|

||||||

|

targetPort: 8080

|

||||||

|

type: ClusterIP

|

||||||

17

2023/day2-ops-code/synchronous/requestor/Dockerfile

Normal file

@ -0,0 +1,17 @@

|

|||||||

|

# Set the base image to use

|

||||||

|

FROM golang:1.20-alpine

|

||||||

|

|

||||||

|

# Set the working directory inside the container

|

||||||

|

WORKDIR /app

|

||||||

|

|

||||||

|

# Copy the source code into the container

|

||||||

|

COPY . .

|

||||||

|

|

||||||

|

# Build the Go application

|

||||||

|

RUN go build -o main .

|

||||||

|

|

||||||

|

# Expose the port that the application will run on

|

||||||

|

EXPOSE 8080

|

||||||

|

|

||||||

|

# Define the command that will run when the container starts

|

||||||

|

CMD ["/app/main"]

|

||||||

5

2023/day2-ops-code/synchronous/requestor/go.mod

Normal file

@ -0,0 +1,5 @@

|

|||||||

|

module main

|

||||||

|

|

||||||

|

go 1.20

|

||||||

|

|

||||||

|

require github.com/go-sql-driver/mysql v1.7.0

|

||||||

2

2023/day2-ops-code/synchronous/requestor/go.sum

Normal file

@ -0,0 +1,2 @@

|

|||||||

|

github.com/go-sql-driver/mysql v1.7.0 h1:ueSltNNllEqE3qcWBTD0iQd3IpL/6U+mJxLkazJ7YPc=

|

||||||

|

github.com/go-sql-driver/mysql v1.7.0/go.mod h1:OXbVy3sEdcQ2Doequ6Z5BW6fXNQTmx+9S1MCJN5yJMI=

|

||||||

134

2023/day2-ops-code/synchronous/requestor/main.go

Normal file

@ -0,0 +1,134 @@

|

|||||||

|

package main

|

||||||

|

|

||||||

|

import (

|

||||||

|

"database/sql"

|

||||||

|

"errors"

|

||||||

|

"fmt"

|

||||||

|

_ "github.com/go-sql-driver/mysql"

|

||||||

|

"io"

|

||||||

|

"net/http"

|

||||||

|

"strings"

|

||||||

|

"time"

|

||||||

|

)

|

||||||

|

|

||||||

|

func storeString(input string) error {

|

||||||

|

// Connect to the database

|

||||||

|

db, err := sql.Open("mysql", "root:password@tcp(mysql:3306)/mysql")

|

||||||

|

if err != nil {
return err
}
defer db.Close()

|

||||||

|

// Insert the random number into the database

|

||||||

|

_, err = db.Exec("INSERT INTO requestor(random_string) VALUES(?)", input)

|

||||||

|

if err != nil {

|

||||||

|

return err

|

||||||

|

}

|

||||||

|

|

||||||

|

fmt.Printf("Random string %s has been inserted into the database\n", input)

|

||||||

|

return nil

|

||||||

|

}

|

||||||

|

|

||||||

|

func getStringFromDB(input string) error {

|

||||||

|

// see if the string exists in the db, if so return nil

|

||||||

|

// if not, return an error

|

||||||

|

db, err := sql.Open("mysql", "root:password@tcp(mysql:3306)/mysql")

|

||||||

|

if err != nil {
return err
}
defer db.Close()

|

||||||

|

result, err := db.Query("SELECT * FROM requestor WHERE random_string = ?", input)

|

||||||

|

if err != nil {

|

||||||

|

return err

|

||||||

|

}

|

||||||

|

|

||||||

|

for result.Next() {

|

||||||

|

var randomString string

|

||||||

|

err = result.Scan(&randomString)

|

||||||

|

if err != nil {

|

||||||

|

return err

|

||||||

|

}

|

||||||

|

if randomString == input {

|

||||||

|

return nil

|

||||||

|

}

|

||||||

|

}

|

||||||

|

|

||||||

|

return errors.New("string not found")

|

||||||

|

}

|

||||||

|

|

||||||

|

func getStringFromGenerator() {

|

||||||

|

// make a request to the generator

|

||||||

|

// save the string to the db

|

||||||

|

r, err := http.Get("http://generator-service:8080/new")

|

||||||

|

if err != nil {

|

||||||

|

fmt.Println(err)

|

||||||

|

return

|

||||||

|

}
defer r.Body.Close()

|

||||||

|

|

||||||

|

body, err := io.ReadAll(r.Body)

|

||||||

|

if err != nil {

|

||||||

|

fmt.Println(err)

|

||||||

|

return

|

||||||

|

}

|

||||||

|

|

||||||

|

fmt.Printf("body from generator: %s\n", string(body))

|

||||||

|

|

||||||

|

if err := storeString(string(body)); err != nil {
fmt.Println(err)
}

|

||||||

|

|

||||||

|

}

|

||||||

|

|

||||||

|

func main() {

|

||||||

|

// setup a goroutine loop calling the generator every minute, saving the result in the DB

|

||||||

|

|

||||||

|

ticker := time.NewTicker(60 * time.Second)

|

||||||

|

quit := make(chan struct{})

|

||||||

|

go func() {

|

||||||

|

for {

|

||||||

|

select {

|

||||||

|

case <-ticker.C:

|

||||||

|

getStringFromGenerator()

|

||||||

|

case <-quit:

|

||||||

|

ticker.Stop()

|

||||||

|

return

|

||||||

|

}

|

||||||

|

}

|

||||||

|

}()

|

||||||

|

|

||||||

|

// Create a new HTTP server

|

||||||

|

server := &http.Server{

|

||||||

|

Addr: ":8080",

|

||||||

|

}

|

||||||

|

|

||||||

|

err := createRequestordb()

|

||||||

|

if err != nil {

|

||||||

|

panic(err.Error())

|

||||||

|

}

|

||||||

|

|

||||||

|

// Handle requests to /check/<string>

|

||||||

|

http.HandleFunc("/check/", func(w http.ResponseWriter, r *http.Request) {

|

||||||

|

// get the value after check from the url

|

||||||

|

id := strings.TrimPrefix(r.URL.Path, "/check/")

|

||||||

|

|

||||||

|

// check if it exists in the db

|

||||||

|

err := getStringFromDB(id)

|

||||||

|

if err != nil {

|

||||||

|

http.Error(w, "string not found", http.StatusNotFound)

|

||||||

|

return

|

||||||

|

}

|

||||||

|

|

||||||

|

fmt.Fprint(w, "string found")

|

||||||

|

})

|

||||||

|

|

||||||

|

// Start the server

|

||||||

|

fmt.Println("Listening on port 8080")

|

||||||

|

err = server.ListenAndServe()

|

||||||

|

if err != nil {

|

||||||

|

panic(err.Error())

|

||||||

|

}

|

||||||

|

}

|

||||||

|

|

||||||

|

func createRequestordb() error {

|

||||||

|

db, err := sql.Open("mysql", "root:password@tcp(mysql:3306)/mysql")

|

||||||

|

if err != nil {

|

||||||

|

return err

|

||||||

|

}

|

||||||

|

defer db.Close()

|

||||||

|

|

||||||

|

// try to create a table for us

|

||||||

|

_, err = db.Exec("CREATE TABLE IF NOT EXISTS requestor(random_string VARCHAR(100))")

|

||||||

|

|

||||||

|

return err

|

||||||

|

}

|

||||||

@ -71,6 +71,16 @@ Auditing Kubernetes configuration is simple and there are multiple tools you can

|

|||||||

|

|

||||||

|

|

||||||

We will see the simple way to scan our cluster with Kubescape:

|

We will see the simple way to scan our cluster with Kubescape:

|

||||||

|

|

||||||

|

```

|

||||||

|

kubescape installation error on macOS M1 and M2 chips is fixed

|

||||||

|

|

||||||

|

[kubescape](https://github.com/kubescape/kubescape)

|

||||||

|

|

||||||

|

```

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

```bash

|

```bash

|

||||||

curl -s https://raw.githubusercontent.com/kubescape/kubescape/master/install.sh | /bin/bash

|

curl -s https://raw.githubusercontent.com/kubescape/kubescape/master/install.sh | /bin/bash

|

||||||

kubescape scan --enable-host-scan --verbose

|

kubescape scan --enable-host-scan --verbose

|

||||||

|

|||||||

@ -67,4 +67,6 @@ Cilium is a Container Networking Interface that leverages eBPF to optimize packe

|

|||||||

### Conclusion

|

### Conclusion

|

||||||

A service mesh is a powerful application networking layer that provides traffic management, observability, and security. We will explore more in the next 6 days of #90DaysOfDevOps!

|

A service mesh is a powerful application networking layer that provides traffic management, observability, and security. We will explore more in the next 6 days of #90DaysOfDevOps!

|

||||||

|

|

||||||

|

Want to get deeper into Service Mesh? Head over to [#70DaysofServiceMesh](https://github.com/distributethe6ix/70DaysOfServiceMesh)!

|

||||||

|

|

||||||

See you in [Day 78](day78.md).

|

See you in [Day 78](day78.md).

|

||||||

|

|||||||

@ -229,4 +229,6 @@ Let's label our default namespace with the *istio-injection=enabled* label. This

|

|||||||

### Conclusion

|

### Conclusion

|

||||||

I decided to jump into getting a service mesh up and online. It's easy enough if you have the right pieces in place, like a Kubernetes cluster and a load-balancer service. Using the demo profile, you can have Istiod, and the Ingress/Egress gateway deployed. Deploy a sample app with a service definition, and you can expose it via the Ingress-Gateway and route to it using a virtual service.

|

I decided to jump into getting a service mesh up and online. It's easy enough if you have the right pieces in place, like a Kubernetes cluster and a load-balancer service. Using the demo profile, you can have Istiod, and the Ingress/Egress gateway deployed. Deploy a sample app with a service definition, and you can expose it via the Ingress-Gateway and route to it using a virtual service.

|

||||||

|

|

||||||

|

Want to get deeper into Service Mesh? Head over to [#70DaysofServiceMesh](https://github.com/distributethe6ix/70DaysOfServiceMesh)!

|

||||||

|

|

||||||

See you on [Day 79](day79.md) and beyond of #90DaysofServiceMesh

|

See you on [Day 79](day79.md) and beyond of #90DaysofServiceMesh

|

||||||

|

|||||||

@ -66,4 +66,6 @@ Governance and Oversight | Istio Community | Linkered Community | AWS | Hashicor

|

|||||||

### Conclusion

|

### Conclusion

|

||||||

Service Meshes have come a long way in terms of capabilities and the environments they support. Istio appears to be the most feature-complete service mesh, providing a balance of platform support, customizability, and extensibility, and it is the most production ready. Linkerd trails right behind with a lighter-weight approach, and is mostly complete as a service mesh. AppMesh is mostly feature-filled but specific to the AWS Ecosystem. Consul is a great contender to Istio and Linkerd. The Cilium CNI is taking the approach of using eBPF and climbing up the networking stack to address Service Mesh capabilities, but it has a lot of catching up to do.

|

Service Meshes have come a long way in terms of capabilities and the environments they support. Istio appears to be the most feature-complete service mesh, providing a balance of platform support, customizability, and extensibility, and it is the most production ready. Linkerd trails right behind with a lighter-weight approach, and is mostly complete as a service mesh. AppMesh is mostly feature-filled but specific to the AWS Ecosystem. Consul is a great contender to Istio and Linkerd. The Cilium CNI is taking the approach of using eBPF and climbing up the networking stack to address Service Mesh capabilities, but it has a lot of catching up to do.

|

||||||

|

|

||||||

|

Want to get deeper into Service Mesh? Head over to [#70DaysofServiceMesh](https://github.com/distributethe6ix/70DaysOfServiceMesh)!

|

||||||

|

|

||||||

See you on [Day 80](day80.md) of #70DaysOfServiceMesh!

|

See you on [Day 80](day80.md) of #70DaysOfServiceMesh!

|

||||||

@ -334,4 +334,6 @@ I briefly covered several traffic management components that allow requests to f

|

|||||||

|

|

||||||

And I got to show you all of this in action!

|

And I got to show you all of this in action!

|

||||||

|

|

||||||

|

Want to get deeper into Service Mesh Traffic Engineering? Head over to [#70DaysofServiceMesh](https://github.com/distributethe6ix/70DaysOfServiceMesh)!

|

||||||

|

|

||||||

See you on [Day 81](day81.md) and beyond! :smile:!

|

See you on [Day 81](day81.md) and beyond! :smile:!

|

||||||

|

|||||||

@ -201,4 +201,6 @@ Go ahead and end the Kiali dashboard process with *ctrl+c*.

|

|||||||

### Conclusion

|

### Conclusion

|

||||||

I've explored a few of the tools to be able to understand how we can observe services in our mesh and better understand how our applications are performing, or, if there are any issues.

|

I've explored a few of the tools to be able to understand how we can observe services in our mesh and better understand how our applications are performing, or, if there are any issues.

|

||||||

|

|

||||||

|

Want to get deeper into Service Mesh Observability? Head over to [#70DaysofServiceMesh](https://github.com/distributethe6ix/70DaysOfServiceMesh)!

|

||||||

|

|

||||||

See you on [Day 82](day82.md)

|

See you on [Day 82](day82.md)

|

||||||

|

|||||||

289

2023/day82.md

@ -0,0 +1,289 @@

|

|||||||

|

## Day 82 - Securing your microservices

|

||||||

|

> **Tutorial**

|

||||||

|

>> *Let's secure our microservices*

|

||||||

|

|

||||||

|

Security is an extensive area when it comes to microservices. I could easily spend an entire 70 days on security alone, but I want to get to the specifics of testing this out in a lab environment with a Service Mesh.

|

||||||

|

|

||||||

|

Before I do, what security concerns am I addressing with a Service Mesh?

|

||||||

|

- Identity

|

||||||

|

- Policy

|

||||||

|

- Authorization

|

||||||

|

- Authentication

|

||||||

|

- Encryption

|

||||||

|

- Data Loss Prevention.

|

||||||

|

|

||||||

|

There's plenty to dig into with these, but what specifically about a Service Mesh helps us achieve them?

|

||||||

|

|

||||||

|

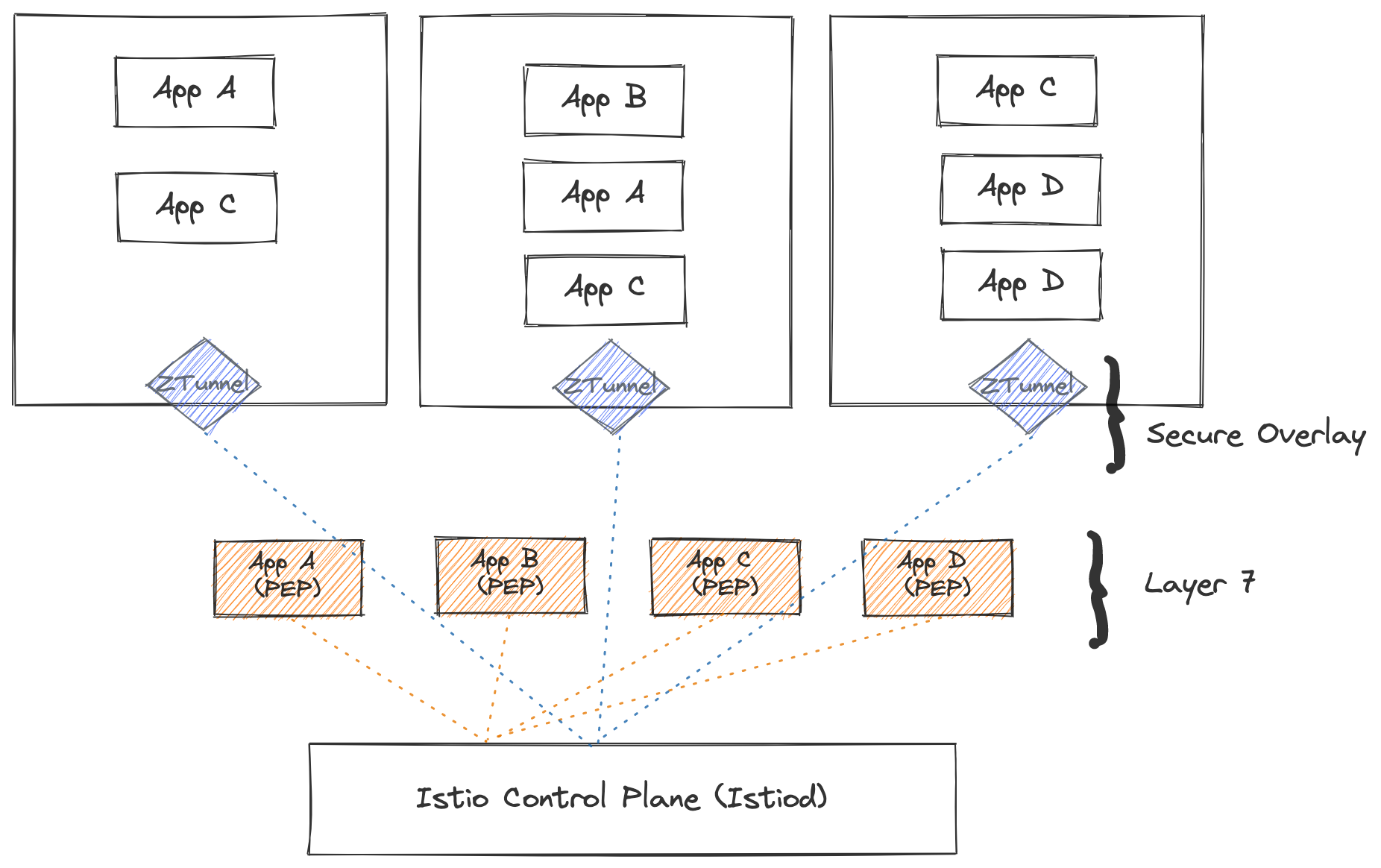

The sidecar, an ingress gateway, a node-level proxy, and the service mesh control plane interacting with the Kubernetes layer.

|

||||||

|

|

||||||

|

As a security operator, I may issue policy configurations, or authentication configurations to the Istio control plane which in turn provides this to the Kubernetes API to turn these into runtime configurations for pods and other resources.

|

||||||

|

|

||||||

|

In Kubernetes, the CNI layer may be able to provide a limited amount of network policy and encryption. Looking at a service mesh, encryption can be provided through mutual-TLS, or mTLS for service-to-service communication, and this same layer can provide a mechanism for Authentication using strong identities in SPIFFE ID format. Layer 7 Authorization is another capability of a service mesh. We can authorize certain services to perform actions (HTTP operations) against other services.
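
To make the "strong identities in SPIFFE ID format" point a little more concrete, here is a minimal Go sketch that splits an Istio-style SPIFFE ID into its parts. It assumes the commonly documented layout `spiffe://<trust-domain>/ns/<namespace>/sa/<service-account>`. The `workloadIdentity` type, the `parseSPIFFEID` helper, and the example ID are all hypothetical names chosen for illustration, not part of Istio's API.

```go
package main

import (
	"fmt"
	"net/url"
	"strings"
)

// workloadIdentity holds the parts of an Istio-style SPIFFE ID.
// This type is an illustration only; it is not an Istio type.
type workloadIdentity struct {
	TrustDomain    string
	Namespace      string
	ServiceAccount string
}

// parseSPIFFEID splits an ID of the assumed form
// spiffe://<trust-domain>/ns/<namespace>/sa/<service-account>.
func parseSPIFFEID(id string) (workloadIdentity, error) {
	u, err := url.Parse(id)
	if err != nil {
		return workloadIdentity{}, err
	}
	if u.Scheme != "spiffe" || u.Host == "" {
		return workloadIdentity{}, fmt.Errorf("not a SPIFFE ID: %q", id)
	}
	// Expect exactly four path segments: ns, <namespace>, sa, <service-account>.
	parts := strings.Split(strings.Trim(u.Path, "/"), "/")
	if len(parts) != 4 || parts[0] != "ns" || parts[2] != "sa" {
		return workloadIdentity{}, fmt.Errorf("unexpected SPIFFE path: %q", u.Path)
	}
	return workloadIdentity{
		TrustDomain:    u.Host,
		Namespace:      parts[1],
		ServiceAccount: parts[3],
	}, nil
}

func main() {
	// Illustrative ID; the trust domain and service account are assumptions.
	id, err := parseSPIFFEID("spiffe://cluster.local/ns/default/sa/bookinfo-productpage")
	if err != nil {
		fmt.Println(err)
		return
	}
	fmt.Printf("%+v\n", id)
}
```

The namespace and service account embedded in the ID are what a peer can later match policy against, which is why identity shows up first in the list of concerns above.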

|

||||||

|

|

||||||

|

mTLS is used to authenticate peers in both directions; more on mTLS and TLS in later days.

|

||||||

|

|

||||||

|

To simplify this, Authentication is about having the keys to unlock the door and enter, and Authorization is about what you are allowed to do or touch once you're in. Combining the two is one form of Defence in Depth.

|

||||||

|

|

||||||

|

Let's review what Istio offers and proceed to configure some of this. We will explore some of these in greater detail in future days.

|

||||||

|

|

||||||

|

### Istio Peer Authentication and mTLS

|

||||||

|

One of the key aspects of the Istio service mesh is its ability to issue and manage identity for workloads that are deployed into the mesh. To put it into perspective, if all services have a sidecar, and each is issued its own identity from the Istiod control plane, a new ability to trust and verify services now exists. This is how Peer Authentication is achieved using mTLS. I plan to go into much more detail in future modules.

|

||||||

|

|

||||||

|

In Istio, Peer Authentication must be configured for services and can be scoped to workloads, namespaces, or the entire mesh.

|

||||||

|

|

||||||

|

There are three modes; I'll explain them briefly and then we'll get to configuring!

|

||||||

|

* PERMISSIVE: for when you have plaintext AND encrypted traffic. Migration-oriented

|

||||||

|

* STRICT: Only mTLS enabled workloads

|

||||||

|

* DISABLE: No mTLS at all.
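
The three modes above can be sketched as a small decision function. This is a toy model written for this walkthrough, not how the sidecar is implemented: the `Mode` type and `accepts` function are hypothetical, and DISABLE is modeled as "plaintext only" based on the one-line description above.

```go
package main

import "fmt"

// Mode mirrors the three PeerAuthentication mTLS modes listed above.
// The type and constants are illustrative, not Istio's Go API.
type Mode string

const (
	Permissive Mode = "PERMISSIVE"
	Strict     Mode = "STRICT"
	Disable    Mode = "DISABLE"
)

// accepts reports whether a workload in the given mode would accept an
// incoming connection in this toy model. isMTLS says whether the caller
// presented a mesh-issued certificate over mutual TLS.
func accepts(mode Mode, isMTLS bool) bool {
	switch mode {
	case Permissive:
		return true // plaintext and mTLS are both accepted
	case Strict:
		return isMTLS // only mTLS callers get through
	case Disable:
		return !isMTLS // modeled as plaintext only (assumption)
	default:
		return false // unknown modes are rejected in this sketch
	}
}

func main() {
	for _, m := range []Mode{Permissive, Strict, Disable} {
		fmt.Printf("%-10s plaintext=%v mTLS=%v\n", m, accepts(m, false), accepts(m, true))
	}
}
```

The STRICT row is the one that matters for the lab below: a pod without a sidecar can only send plaintext, so it is the caller that gets turned away.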

|

||||||

|

|

||||||

|

We can also take care of End-user Auth using JWT (JSON Web Tokens) but I'll explore this later.

|

||||||

|

|

||||||

|

### Configuring Istio Peer AuthN and Strict mTLS

|

||||||

|

Let's get to configuring our environment with Peer Authentication and verify.

|

||||||

|

|

||||||

|

We already have our environment ready to go so we just need to deploy another sample app that won't have the sidecar, and we also need to turn up an Authentication policy.

|

||||||

|

|

||||||

|

Let's deploy a new namespace called sleep and deploy a sleeper pod to it.

|

||||||

|

```

|

||||||

|

kubectl create ns sleep

|

||||||

|

```

|

||||||

|

```

|

||||||

|

kubectl get ns

|

||||||

|

```

|

||||||

|

```

|

||||||

|

cd istio-1.16.1

|

||||||

|

kubectl apply -f samples/sleep/sleep.yaml -n sleep

|

||||||

|

```

|

||||||

|

|

||||||

|

Let's test to make sure the sleeper pod can communicate with the bookinfo app!

|

||||||

|

This command simply execs into the sleep pod, selected by the "app=sleep" label in the sleep namespace, and proceeds to curl productpage in the default namespace.

|

||||||

|

The status code should be 200!

|

||||||

|

```

|

||||||

|

kubectl exec "$(kubectl get pod -l app=sleep -n sleep -o jsonpath={.items..metadata.name})" -c sleep -n sleep -- curl productpage.default.svc.cluster.local:9080 -s -o /dev/null -w "%{http_code}\n"

|

||||||

|

```

|

||||||

|

```

|

||||||

|

200

|

||||||

|

```

|

||||||

|

|

||||||

|

Let's apply our PeerAuthentication

|

||||||

|

```

|

||||||

|

kubectl apply -f - <<EOF

|

||||||

|

apiVersion: security.istio.io/v1beta1

|

||||||

|

kind: PeerAuthentication

|

||||||

|

metadata:

|

||||||

|

name: "default"

|

||||||

|

namespace: "istio-system"

|

||||||

|

spec:

|

||||||

|

mtls:

|

||||||

|

mode: STRICT

|

||||||

|

EOF

|

||||||

|

```

|

||||||

|

|

||||||

|

Now, if we re-run the curl command as I did previously (I just up-arrowed, but you can copy and paste from above), it will fail with exit code 56, which curl uses for a failure in receiving network data. This shows that peer authentication in STRICT mode stops non-mTLS workloads from communicating with mTLS workloads.

|

||||||

|

|

||||||

|

As I mentioned previously, I'll expand further in future days.

|

||||||

|

|

||||||

|

### Istio Layer 7 Authorization

|

||||||

|

Authorization is a very interesting area of security, mainly because we can granularly control who can perform which action against another resource. More specifically, what HTTP operations can one service perform against another? Can a service *GET* data from another service using HTTP operations?

|

||||||

|

|

||||||

|

I'll briefly explore a simple Authorization policy that allows GET requests but disallows DELETE requests against a resource. This is a highly granular approach to Zero-trust.

|

||||||

|

|

||||||

|

A consideration when moving towards production is to use a tool like Kiali (or others) to create a service mapping and understand the various HTTP calls made between services. This will allow you to establish incremental policies.

|

||||||

|

|

||||||

|

The one key element here is that Envoy (or Proxyv2 for Istio), running as a sidecar, is the policy enforcement point for all Authorization Policies. Policies are evaluated by the authorization engine that authorizes requests at runtime. The destination sidecar, or Envoy, is responsible for evaluating this.
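
As a rough mental model of what the destination sidecar decides per request, here is a small Go sketch of "allow GET, deny DELETE, deny anything else". It is deliberately simplified: the `rule` type and `authorize` function are hypothetical, and the first-match, default-deny logic is an assumption made for this example rather than Istio's actual evaluation order.

```go
package main

import "fmt"

// rule is a hypothetical, simplified stand-in for one authorization rule:
// an HTTP method and whether it is allowed.
type rule struct {
	Method string
	Allow  bool
}

// authorize returns the decision of the first rule that matches the method.
// Requests that match no rule are denied, mirroring a deny-all baseline.
func authorize(rules []rule, method string) bool {
	for _, r := range rules {
		if r.Method == method {
			return r.Allow
		}
	}
	return false
}

func main() {
	// Allow reads, explicitly refuse deletes; everything else falls through to deny.
	rules := []rule{
		{Method: "GET", Allow: true},
		{Method: "DELETE", Allow: false},
	}
	for _, m := range []string{"GET", "DELETE", "POST"} {
		fmt.Printf("%s allowed: %v\n", m, authorize(rules, m))
	}
}
```

Starting from deny and adding narrow allows is the same shape as the incremental, Kiali-informed policies suggested above.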

|

||||||

|

|

||||||

|

Let's get to configuring!

|

||||||

|

|

||||||

|

|

||||||

|

### Configuring L7 Authorization Policies

|

||||||

|

|

||||||

|

In order to get a policy up and running, we first need to deny all HTTP-based operations.

|

||||||

|

|

||||||

|

Also, the flow of the request looks like this:

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

|

The lock-icon is indicative of the fact that mTLS is enabled and ready to go.

|

||||||

|

|

||||||

|

Let's DENY ALL

|

||||||

|

```

|

||||||